Optimizing loop diuretic treatment for mortality reduction in patients with acute dyspnea using a practical offline reinforcement learning pipeline for healthcare: a retrospective single center simulation study

Abstract

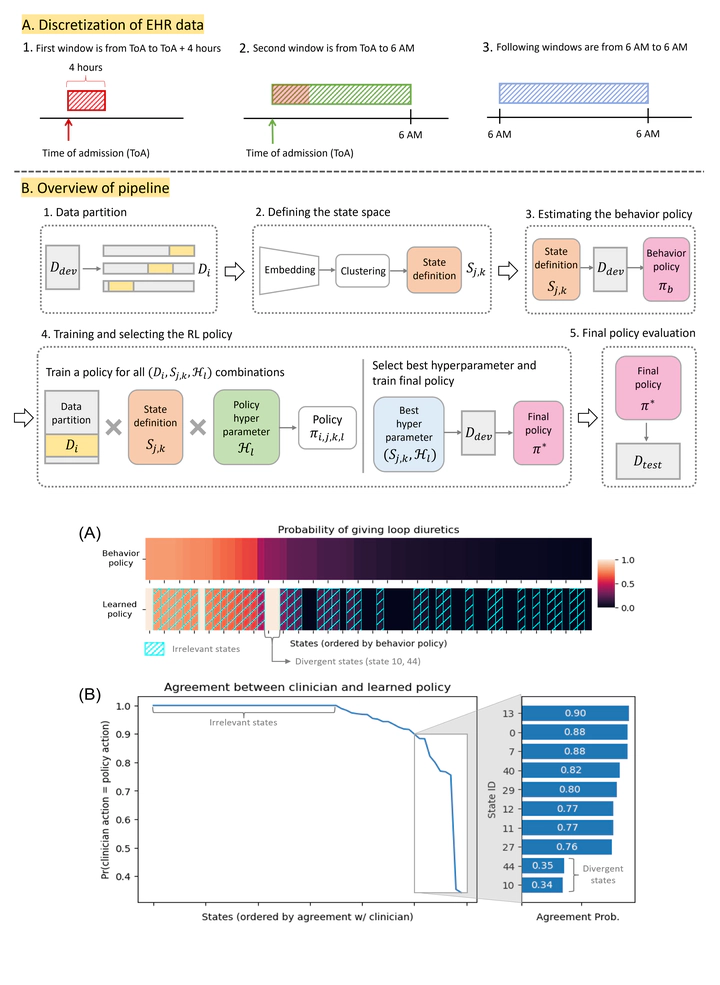

Background: Offline reinforcement learning (RL) has been increasingly applied to clinical decision-making problems. However, due to the lack of a standardized pipeline, prior work has often relied on strategies that may lead to overfitted policies and inaccurate evaluations.

Objective: In this work, we present a practical pipeline, PROP-RL, designed to improve robustness and minimize disruption to clinical workflow. We demonstrate its efficacy in the context of learning treatment policies for administering loop diuretics in hospitalized patients.

Methods: Our cohort included adult inpatients who were admitted to the emergency department at Michigan Medicine between 2015 and 2019 and who required supplemental oxygen. We modeled the management of loop diuretics as an offline RL problem using a discrete state space based on features extracted from electronic health records, a binary action space corresponding to the daily use of loop diuretics, and a reward function based on in-hospital mortality. The policy was trained on data from 2015 to 2018 and evaluated on a held-out set of hospitalizations from 2019 in terms of the estimated reduction in mortality compared with clinician behavior.

Results: The final study cohort included 36,570 hospitalizations. The learned treatment policy was based on 60 states: the policy deferred to clinicians in 36 states, recommended the majority action in 22 states, and diverged significantly from clinician behavior in 2 states. Among the cases where the learned policy meaningfully diverged from the behavior policy, it was estimated to significantly reduce the mortality rate by 1.6 percentage points, from 3.8% to 2.2% (95% CI: 0.4, 2.7; P=.006).

Conclusions: We applied our pipeline to the clinical problem of loop diuretic treatment, highlighting the importance of robust state representation and thoughtful policy selection and evaluation. Our work reveals areas of potential improvement in current clinical care for loop diuretics and serves as a blueprint for using offline RL for sequential treatment selection in clinical settings.
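To make the problem formulation in the Methods concrete, the following is a minimal sketch of how hospitalizations could be turned into offline RL transitions over discrete states and a binary daily action, and how a behavior (clinician) policy could be estimated from counts. It is an illustration under stated assumptions, not the PROP-RL implementation: the toy `episodes`, the function names, and the reward encoding (-1 for in-hospital death, 0 otherwise, given only at the final step) are all hypothetical choices that the abstract does not specify.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: each hospitalization is a list of daily
# (state_id, action) steps plus a flag for in-hospital death.
# action 1 = loop diuretic given that day, action 0 = not given.
episodes = [
    ([(0, 1), (1, 1)], False),
    ([(0, 0), (2, 0)], True),
    ([(0, 1), (1, 0)], False),
]


def terminal_reward(died):
    # Assumed encoding (not stated in the abstract): -1 for death, 0 for survival.
    return -1.0 if died else 0.0


def to_transitions(episode):
    """Convert one hospitalization into (s, a, r, s_next, done) tuples."""
    steps, died = episode
    transitions = []
    for i, (state, action) in enumerate(steps):
        last = i == len(steps) - 1
        reward = terminal_reward(died) if last else 0.0
        next_state = None if last else steps[i + 1][0]
        transitions.append((state, action, reward, next_state, last))
    return transitions


def behavior_policy(episodes):
    """Estimate P(action | state) from observed clinician actions by counting."""
    counts = defaultdict(Counter)
    for steps, _ in episodes:
        for state, action in steps:
            counts[state][action] += 1
    return {
        state: {a: c[a] / sum(c.values()) for a in (0, 1)}
        for state, c in counts.items()
    }


if __name__ == "__main__":
    for episode in episodes:
        print(to_transitions(episode))
    print(behavior_policy(episodes))
```

In this framing, a learned policy that "defers to clinicians" or "recommends the majority action" can be read directly against the per-state action frequencies returned by `behavior_policy`; how the pipeline actually makes that determination is not described in the abstract.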