Democratizing EHR Analyses with FIDDLE - A Flexible Data-Driven Preprocessing Pipeline for Structured Clinical Data

Abstract

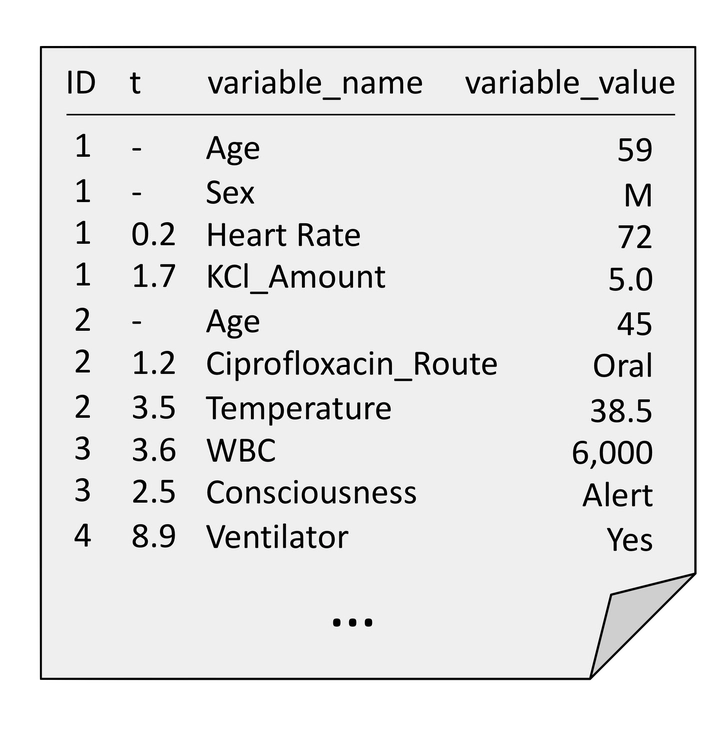

Objective. In applying machine learning (ML) to electronic health record (EHR) data, many decisions must be made before any ML is applied; such preprocessing is labor-intensive. As the role of ML in health care grows, there is an increasing need for systematic and reproducible preprocessing techniques for EHR data. Thus, we developed FIDDLE (Flexible Data-Driven Pipeline), an open-source framework that streamlines the preprocessing of data extracted from the EHR.

Materials and Methods. Largely data-driven, FIDDLE systematically transforms structured EHR data into feature vectors, limiting the number of decisions a user must make while incorporating good practices from the literature. To demonstrate its utility and flexibility, we conducted a proof-of-concept experiment in which we applied FIDDLE to 2 publicly available EHR data sets collected from intensive care units: MIMIC-III and the eICU Collaborative Research Database. We trained different ML models to predict 3 clinically important outcomes: in-hospital mortality, acute respiratory failure, and shock. We evaluated the models using the area under the receiver operating characteristic curve (AUROC) and compared their performance to several baselines.
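To make the evaluation metric concrete, the following minimal Python sketch computes AUROC as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, counting ties as one half. It is an illustration only: the function name `auroc` and the toy labels and scores are hypothetical and are not taken from FIDDLE or from the study's code, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
def auroc(labels, scores):
    """Pairwise (Mann-Whitney) estimate of AUROC for binary labels in {0, 1}.

    Hypothetical helper for illustration; not part of FIDDLE.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative example")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(pos) * len(neg))


# Toy example (made-up values): 3 of the 4 positive/negative pairs are ordered correctly.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
print(auroc(toy_labels, toy_scores))  # 0.75
```

An AUROC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of positive and negative examples.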

Results. Across tasks and data sets (MIMIC-III and eICU), FIDDLE extracted between 2,528 and 7,403 features. On all tasks, FIDDLE-based models achieved good discriminative performance, with AUROCs of 0.757–0.886, comparable to the performance of MIMIC-Extract, a preprocessing pipeline designed specifically for MIMIC-III. Furthermore, our results showed that FIDDLE generalizes across different prediction times, ML algorithms, and data sets, while being relatively robust to different settings of user-defined arguments.

Conclusions. FIDDLE, an open-source preprocessing pipeline, facilitates applying ML to structured EHR data. By accelerating and standardizing labor-intensive preprocessing, FIDDLE can help stimulate progress in building clinically useful ML tools for EHR data.