Model Selection for Offline Reinforcement Learning: Practical Considerations for Healthcare Settings

Abstract

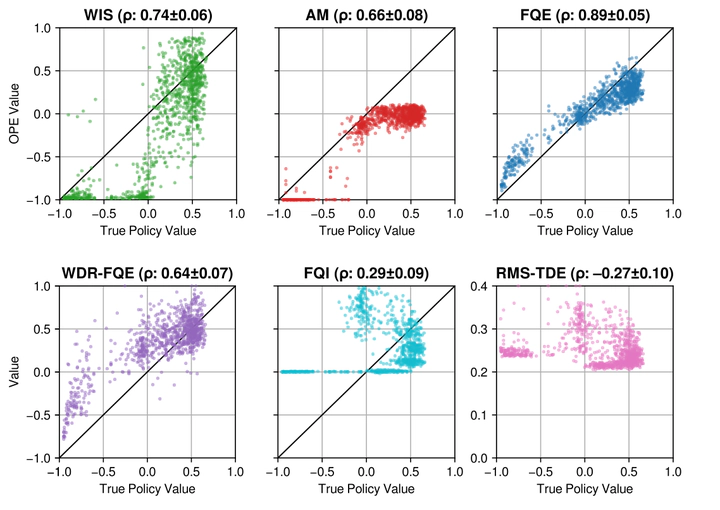

Reinforcement learning (RL) can be used to learn treatment policies and aid decision making in healthcare. However, given the need for generalization over complex state/action spaces, the incorporation of function approximators (e.g., deep neural networks) requires model selection to reduce overfitting and improve policy performance at deployment. Yet a standard validation pipeline for model selection requires running a learned policy in the actual environment, which is often infeasible in a healthcare setting. In this work, we investigate a model selection pipeline for offline RL that relies on off-policy evaluation (OPE) as a proxy for validation performance. We present an in-depth analysis of popular OPE methods, highlighting the additional hyperparameters and computational requirements (fitting/inference of auxiliary models) that arise when they are used to rank a set of candidate policies. To compare the utility of different OPE methods as part of the model selection pipeline, we run experiments on a clinical decision-making task, sepsis treatment. Among the OPE methods we compare, fitted Q evaluation (FQE) is the most robust to different sampling conditions (varying dataset sizes and data-generating behaviors) and consistently leads to the best validation ranking, but it comes at a high computational cost. To balance this trade-off between ranking accuracy and computational efficiency, we propose a simple two-stage approach that accelerates model selection by avoiding potentially unnecessary computation. Our work represents an important first step towards enabling fairer comparisons in offline RL; it serves as a practical guide for offline RL model selection and can help RL practitioners in healthcare learn better policies on real-world datasets.
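As a rough illustration of the two-stage idea, the sketch below shows one plausible reading: a cheap estimator scores every candidate policy, and a costly estimator (such as FQE) is run only on the shortlist. The abstract does not specify which estimator is used in each stage or how many candidates are kept, so the function name `two_stage_select`, the `keep` parameter, the scoring callables, and the toy scores are all hypothetical placeholders rather than the method as implemented in this work.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def two_stage_select(
    candidates: Sequence[str],
    cheap_score: Callable[[str], float],
    expensive_score: Callable[[str], float],
    keep: int,
) -> Tuple[str, List[str], Dict[str, float]]:
    """Prune with a cheap estimator, then rank the survivors with a costly one.

    Both scoring callables are hypothetical stand-ins for OPE estimators that
    return an estimated policy value (higher is better).
    """
    if not candidates:
        raise ValueError("need at least one candidate policy")
    if keep < 1:
        raise ValueError("keep must be at least 1")

    # Stage 1: score every candidate with the cheap estimator and keep the top `keep`.
    stage1 = {c: cheap_score(c) for c in candidates}
    shortlist = sorted(candidates, key=lambda c: stage1[c], reverse=True)[:keep]

    # Stage 2: run the expensive estimator only on the shortlist.
    stage2 = {c: expensive_score(c) for c in shortlist}
    best = max(shortlist, key=lambda c: stage2[c])
    return best, shortlist, stage2


# Toy scores, invented purely for illustration (not results from any experiment).
cheap = {"pi_a": 0.2, "pi_b": 0.9, "pi_c": 0.7, "pi_d": 0.1}
costly = {"pi_a": 0.3, "pi_b": 0.6, "pi_c": 0.8, "pi_d": 0.2}

best, shortlist, scores = two_stage_select(
    list(cheap), lambda c: cheap[c], lambda c: costly[c], keep=2
)
print(best, shortlist)
```

With `keep=2`, the costly estimator is evaluated on two of the four candidates instead of all four. The price of this saving is that a policy pruned in the first stage can never be selected, so the choice of `keep` trades computation against the risk of discarding a good candidate.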